GCP

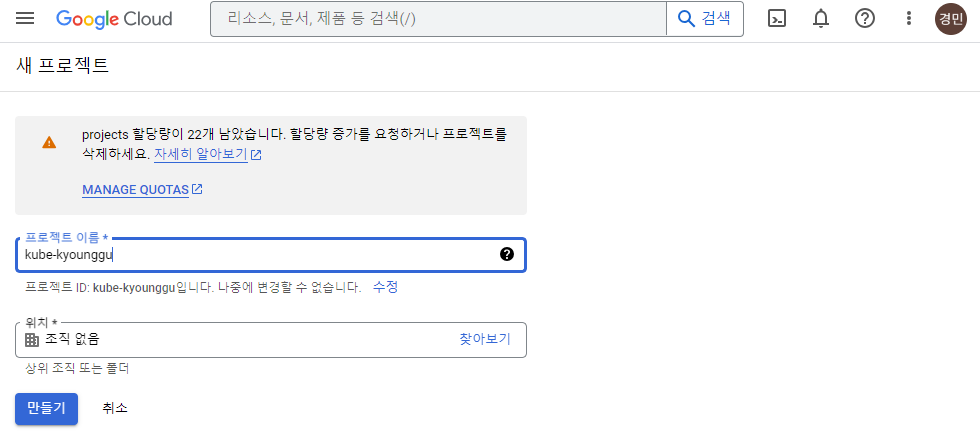

Create a new project

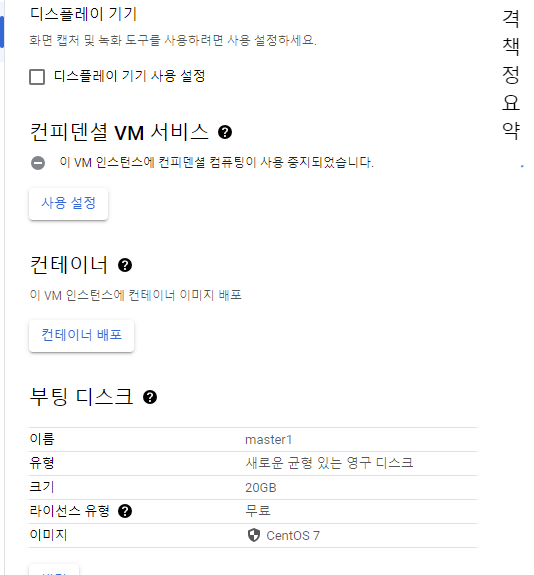

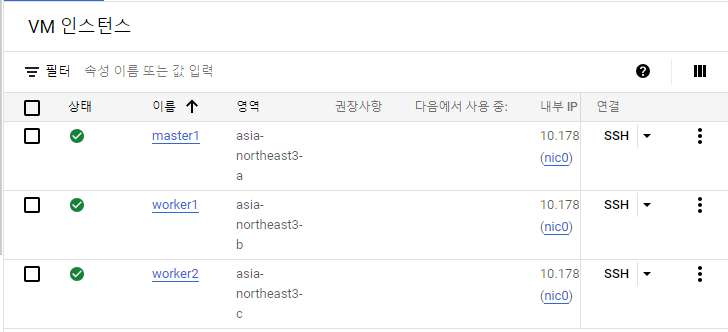

Create worker1 (asia-northeast3-b) and worker2 (asia-northeast3-c) with the same specs.

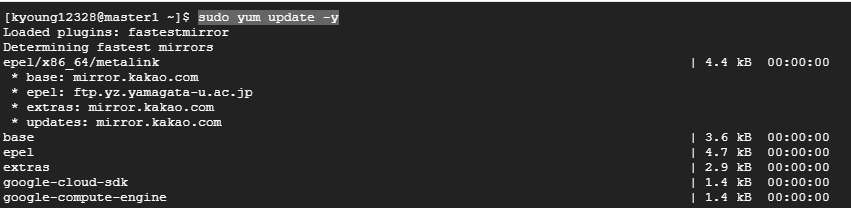

# Update packages on all VMs

sudo yum update -y

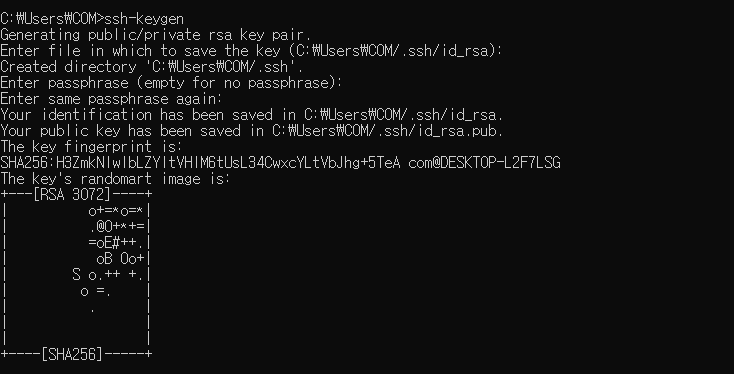

# In a Windows cmd window

ssh-keygen

Open the generated 'id_rsa.pub' in Notepad and copy its contents.

GCP - Multi-node

// VM IP addresses (internal, external)

master1 (a)

10.178.0.2 34.64.39.169

worker1 (b)

10.178.0.3 34.64.99.212

worker2 (c)

10.178.0.4 34.64.106.92

//

## Connect to each VM through its external IP with MobaXterm

# Switch to the root user on all VMs

sudo su - root

# Add host entries on all VMs (so the nodes can reach each other by name)

cat <<EOF >> /etc/hosts

10.178.0.2 master1

10.178.0.3 worker1

10.178.0.4 worker2

EOF
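Appending with `cat <<EOF >> /etc/hosts` adds duplicate lines if the step is run a second time. A minimal sketch of an idempotent variant, assuming bash and GNU grep; `add_host_entry` is a hypothetical helper, not part of any tool:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file only when that hostname
# is not already listed, so re-running the node setup does not duplicate entries.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -w matches whole words only, so "worker1" does not match "worker10"
  if ! grep -qw -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example (as root, on every VM):
# add_host_entry 10.178.0.2 master1
# add_host_entry 10.178.0.3 worker1
# add_host_entry 10.178.0.4 worker2
```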

# Disable SELinux

setenforce 0

vi /etc/selinux/config

//

SELINUX=disabled

//
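`setenforce 0` only switches SELinux to permissive until the next reboot. The `SELINUX=disabled` line in /etc/selinux/config is what persists, and the `sed` used later in these notes only rewrites `SELINUX=enforcing`. A sketch that handles any current value, assuming GNU sed (`-i` without a backup suffix); `disable_selinux_config` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: force SELINUX=disabled whatever the current mode is
# (enforcing or permissive). SELINUXTYPE=... is left alone because "^SELINUX="
# requires the "=" directly after "SELINUX".
disable_selinux_config() {
  local file="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Example (as root): disable_selinux_config
```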

# Install and configure Docker

#curl https://download.docker.com/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo

#sed -i -e "s/enabled=1/enabled=0/g" /etc/yum.repos.d/docker-ce.repo

#yum --enablerepo=docker-ce-stable -y install docker-ce-19.03.15-3.el7

#cat <<EOF | sudo tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

#systemctl enable --now docker

#systemctl daemon-reload

#systemctl restart docker

#systemctl disable --now firewalld

#swapoff -a

#sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

#sed -i '/ swap / s/^/#/' /etc/fstab

#cat <<EOF > /etc/sysctl.d/k8s.conf # kubernetes

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

#sysctl --system

#reboot
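kubeadm's preflight check and the kubelet (by default) refuse to run while swap is enabled, which is why swap is turned off and commented out of /etc/fstab above. That `sed '/ swap / s/^/#/'` only matches space-separated entries and adds another `#` each time it runs. A sketch of an idempotent version, assuming GNU sed with `-E`; `comment_out_swap` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab file.
# Lines that already start with "#" are skipped, so running it twice is harmless,
# and [[:space:]] matches both spaces and tabs around the "swap" field.
comment_out_swap() {
  local file="${1:-/etc/fstab}"
  sed -i -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Example (as root): swapoff -a && comment_out_swap
```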

#sudo su - root

# cat <<'EOF' > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

# yum -y install kubeadm-1.19.16-0 kubelet-1.19.16-0 kubectl-1.19.16-0 --disableexcludes=kubernetes

# systemctl enable --now kubelet

GCP - Master node

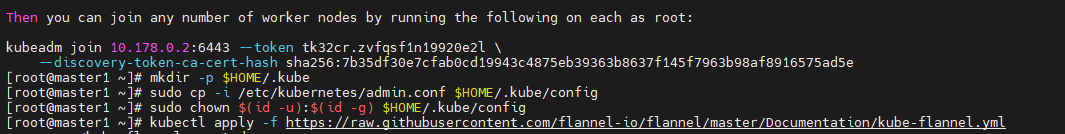

## On the master node

#kubeadm init --apiserver-advertise-address=10.178.0.2 --pod-network-cidr=10.244.0.0/16

## Copy and keep the join command (token) from the output

//

kubeadm join 10.178.0.2:6443 --token tk32cr.zvfqsf1n19920e2l \

--discovery-token-ca-cert-hash sha256:7b35df30e7cfab0cd19943c4875eb39363b8637f145f7963b98af8916575ad5e

//
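The join command is only printed in the `kubeadm init` output, and the bootstrap token in it expires (24 hours by default). If that output was saved to a file, a sketch like the one below pulls the command back out as a single line; `extract_join_cmd` and the log file name are hypothetical. Once the token has expired, running `kubeadm token create --print-join-command` on the master prints a fresh join command instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each "kubeadm join ..." command found in a saved
# `kubeadm init` log as one line, joining the backslash-continued lines.
# Example: kubeadm init ... | tee kubeadm-init.log; extract_join_cmd kubeadm-init.log
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join/ { grab = 1; cmd = "" }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)              # drop indentation
      cont = sub(/[ \t]*\\$/, "", line)     # strip a trailing backslash, remember it
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; grab = 0 }
    }
  ' "$1"
}
```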

## Run the commands printed by kubeadm init as-is (kubeconfig setup)

# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

# yum install -y bash-completion git wget unzip mysql

## On all nodes

sysctl -w net.ipv4.ip_forward=1

# Enable kubectl auto-completion on the master node

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

exit

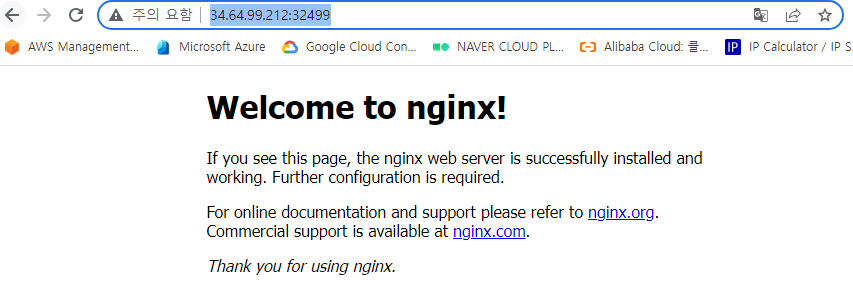

GCP - Worker nodes

# Run the join command on worker1 and worker2

kubeadm join 10.178.0.2:6443 --token tk32cr.zvfqsf1n19920e2l \

--discovery-token-ca-cert-hash sha256:7b35df30e7cfab0cd19943c4875eb39363b8637f145f7963b98af8916575ad5e

GCP - Using the multi-node cluster

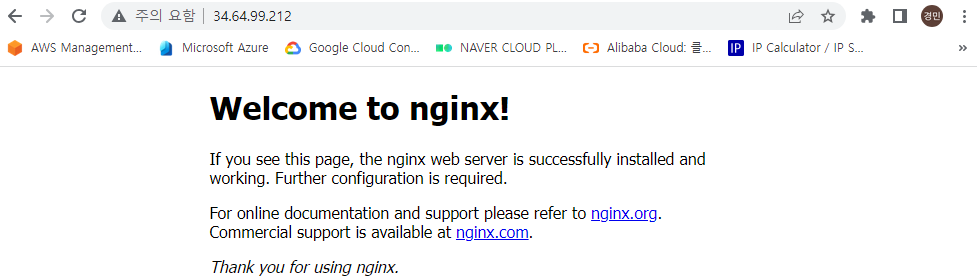

# Key point: pass the internal IP that is mapped 1:1 to the node's public IP

kubectl expose pod nginx-pod --name loadbalancer --type=LoadBalancer --external-ip 10.178.0.3 --port 80
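On GCP the external IP is not configured on the VM's network interface; GCP translates it 1:1 to the internal IP, so a packet sent to 34.64.99.212 arrives at worker1 addressed to 10.178.0.3. That is why `--external-ip` takes the internal address while the browser uses the external one (the VPC firewall must also allow the port, since the default network only opens SSH, RDP, ICMP and internal traffic). A small lookup sketch built from the IP table in these notes; the function name is hypothetical, and ephemeral external IPs can change after a VM is stopped and started:

```shell
#!/usr/bin/env bash
# Hypothetical lookup: internal (VPC) IP -> external IP, from the VM IP table in these notes.
external_ip_for() {
  case "$1" in
    10.178.0.2) echo 34.64.39.169 ;;   # master1
    10.178.0.3) echo 34.64.99.212 ;;   # worker1
    10.178.0.4) echo 34.64.106.92 ;;   # worker2
    *) echo "unknown internal IP: $1" >&2; return 1 ;;
  esac
}

# Example: after exposing on 10.178.0.3, open http://$(external_ip_for 10.178.0.3)/
```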

## Add a shortcut for convenience

# Add a command alias (kubectl -> k)

vi ~/.bashrc

//

alias k='kubectl'

complete -o default -F __start_kubectl k

//

# exit

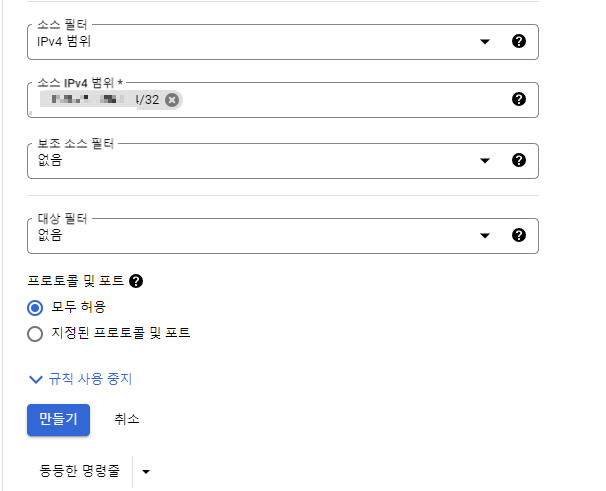

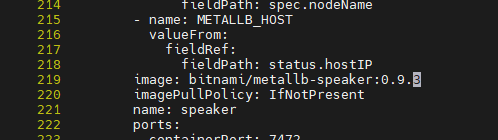

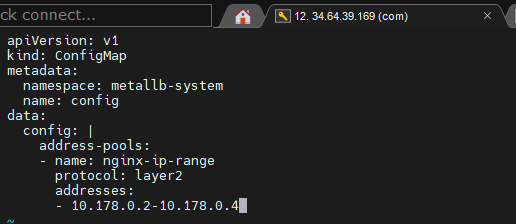

GCP - metallb

# On the master node

git clone https://github.com/hali-linux/_Book_k8sInfra.git

vi /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

//

image: bitnami/metallb-speaker:0.9.3

//

image: bitnami/metallb-controller:0.9.3

//

kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

# vi metallb-l2config.yaml

//

apiVersion: v1

kind: ConfigMap

metadata:

namespace: metallb-system

name: config

data:

config: |

address-pools:

- name: nginx-ip-range

protocol: layer2

addresses:

- 10.178.0.2-10.178.0.4

//
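In layer2 mode MetalLB hands out addresses from `addresses`, by default one per LoadBalancer Service, so the range 10.178.0.2-10.178.0.4 holds three addresses. Here they are the nodes' own internal IPs, which is what lets GCP's 1:1 NAT deliver external traffic to them. Once the pool is used up, further LoadBalancer Services should stay in `<pending>`. A sketch for counting and checking addresses in such a range, assuming bash 64-bit arithmetic (function names hypothetical):

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer.
ip2int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Number of addresses in a MetalLB-style "start-end" range.
range_size() {
  local start="${1%-*}" end="${1#*-}"
  echo $(( $(ip2int "$end") - $(ip2int "$start") + 1 ))
}

# Succeeds if the IP lies inside the "start-end" range.
in_range() {
  local ip start end
  ip=$(ip2int "$1"); start=$(ip2int "${2%-*}"); end=$(ip2int "${2#*-}")
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}
```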

# kubectl apply -f metallb-l2config.yaml

GCP - Deployment

# mkdir workspace && cd $_

# vi deployment.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

spec:

replicas: 4

selector:

matchLabels:

app: nginx-deployment

template:

metadata:

name: nginx-deployment

labels:

app: nginx-deployment

spec:

containers:

- name: nginx-deployment-container

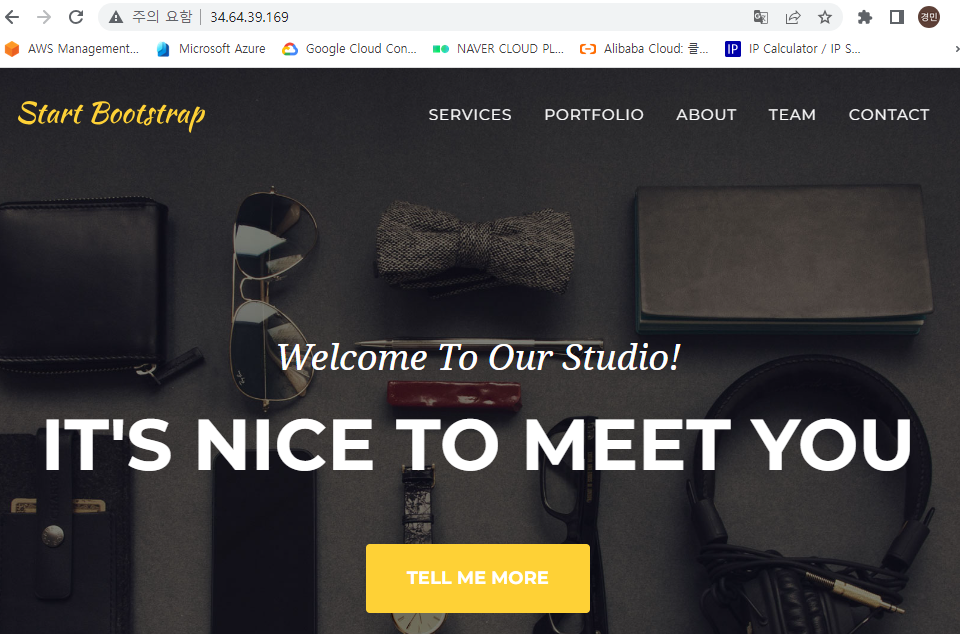

image: kyounggu/web-site:aws

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: loadbalancer-service-deployment

spec:

type: LoadBalancer

# externalIPs:

# - 192.168.0.143

selector:

app: nginx-deployment

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f deployment.yaml

# scale out

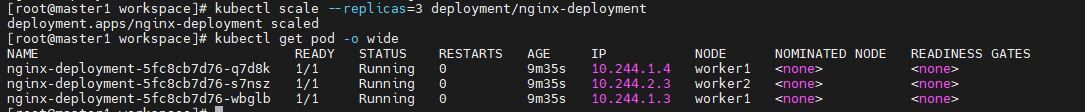

kubectl scale --current-replicas=4 --replicas=6 deployment/nginx-deployment

# scale in

kubectl scale --replicas=3 deployment/nginx-deployment

## Controlling Deployment rolling updates

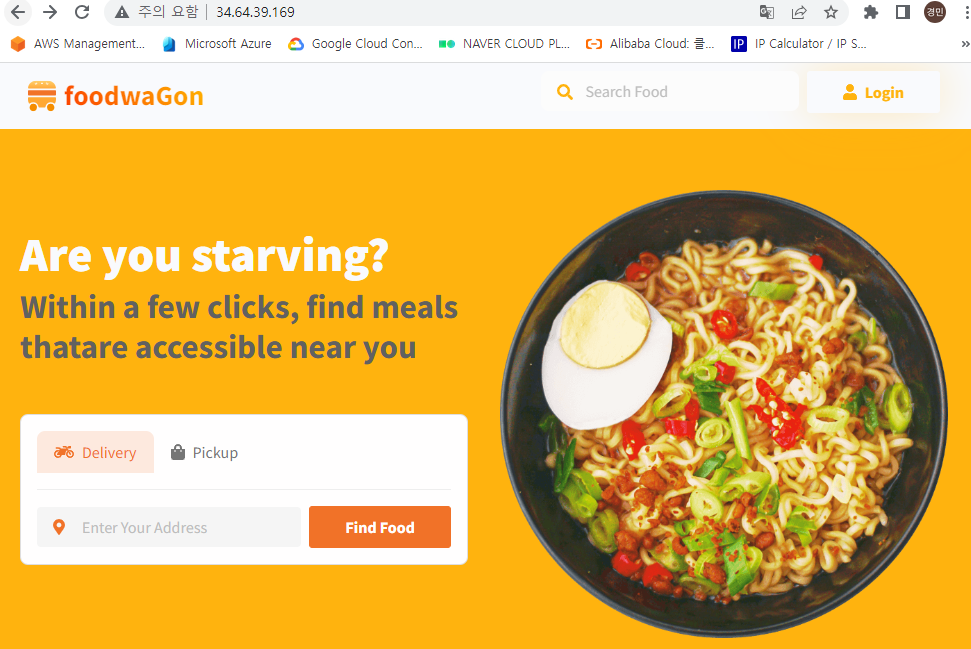

# kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=kyounggu/web-site:food

# kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=nginx

# kubectl rollout history deployment nginx-deployment

# kubectl rollout history deployment nginx-deployment --revision=2 # show details of revision 2

# kubectl rollout undo deployment nginx-deployment # roll back to the previous revision

# kubectl rollout undo deployment nginx-deployment --to-revision=1 # restore revision 1

GCP - multi-container

# vi multipod.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: multipod

spec:

containers:

- name: nginx-container # 1st container

image: nginx:1.14

ports:

- containerPort: 80

- name: centos-container # 2nd container

image: centos:7

command:

- sleep

- "10000"

//

# kubectl apply -f multipod.yaml

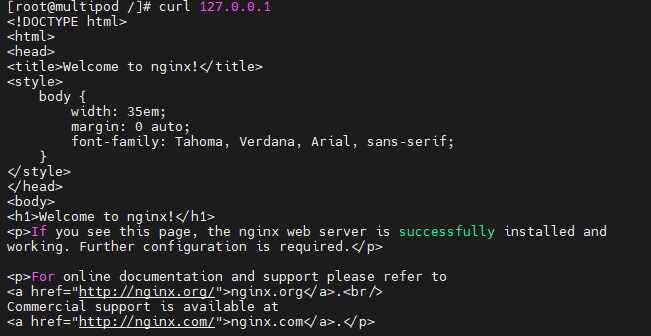

# Open a shell in centos-container of the multi-container pod

kubectl exec -it multipod -c centos-container -- bash

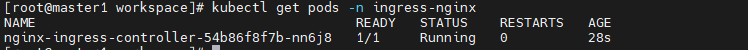

GCP - Ingress

# kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.2/ingress-nginx.yaml

# kubectl get pods -n ingress-nginx

## A private registry can't be used in this GCP setup, so push test-home from the existing Docker host to Docker Hub

# vi ingress-deploy.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: foods-deploy

spec:

replicas: 1

selector:

matchLabels:

app: foods-deploy

template:

metadata:

labels:

app: foods-deploy

spec:

containers:

- name: foods-deploy

image: kyounggu/test-home:v1.0

---

apiVersion: v1

kind: Service

metadata:

name: foods-svc

spec:

type: ClusterIP

selector:

app: foods-deploy

ports:

- protocol: TCP

port: 80

targetPort: 80

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: sales-deploy

spec:

replicas: 1

selector:

matchLabels:

app: sales-deploy

template:

metadata:

labels:

app: sales-deploy

spec:

containers:

- name: sales-deploy

image: kyounggu/test-home:v2.0

---

apiVersion: v1

kind: Service

metadata:

name: sales-svc

spec:

type: ClusterIP

selector:

app: sales-deploy

ports:

- protocol: TCP

port: 80

targetPort: 80

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: home-deploy

spec:

replicas: 1

selector:

matchLabels:

app: home-deploy

template:

metadata:

labels:

app: home-deploy

spec:

containers:

- name: home-deploy

image: kyounggu/test-home:v0.0

---

apiVersion: v1

kind: Service

metadata:

name: home-svc

spec:

type: ClusterIP

selector:

app: home-deploy

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f ingress-deploy.yaml

# vi ingress-config.yaml

//

apiVersion: networking.k8s.io/v1beta1 # works on 1.19; removed in Kubernetes 1.22 (networking.k8s.io/v1 uses a different backend syntax)

kind: Ingress

metadata:

name: ingress-nginx

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /

spec:

rules:

- http:

paths:

- path: /foods

backend:

serviceName: foods-svc

servicePort: 80

- path: /sales

backend:

serviceName: sales-svc

servicePort: 80

- path:

backend:

serviceName: home-svc

servicePort: 80

//

# kubectl apply -f ingress-config.yaml

# vi ingress-service.yaml

//

apiVersion: v1

kind: Service

metadata:

name: nginx-ingress-controller-svc

namespace: ingress-nginx

spec:

ports:

- name: http

protocol: TCP

port: 80

targetPort: 80

- name: https

protocol: TCP

port: 443

targetPort: 443

selector:

app.kubernetes.io/name: ingress-nginx

type: LoadBalancer

# externalIPs:

# - 192.168.2.95 # master1's IP

//

# kubectl apply -f ingress-service.yaml

# kubectl -n ingress-nginx get all

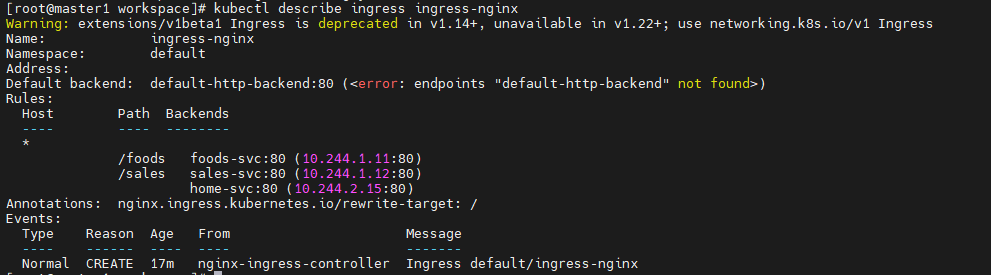

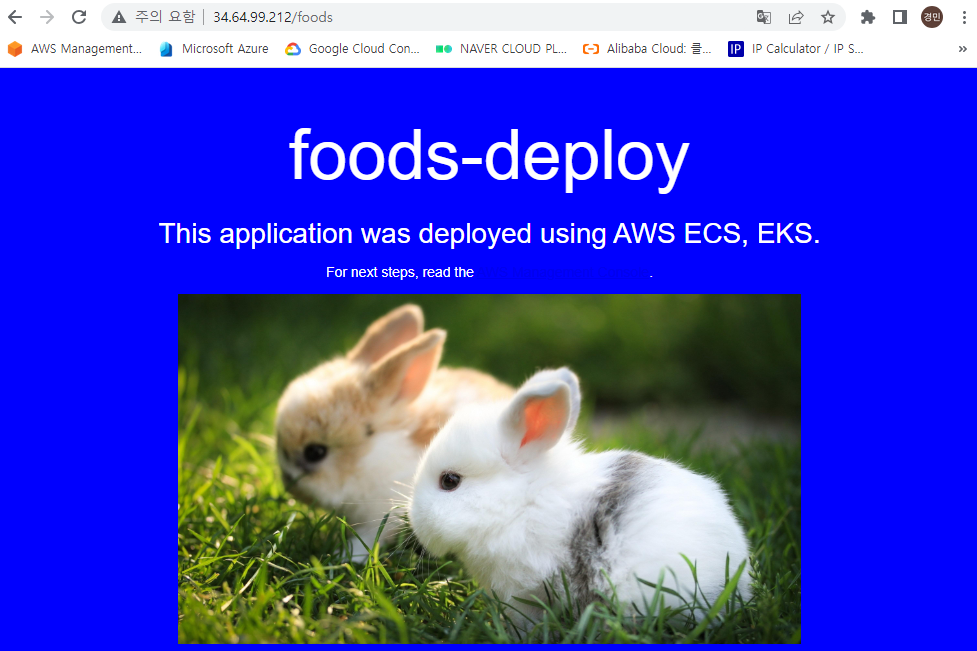

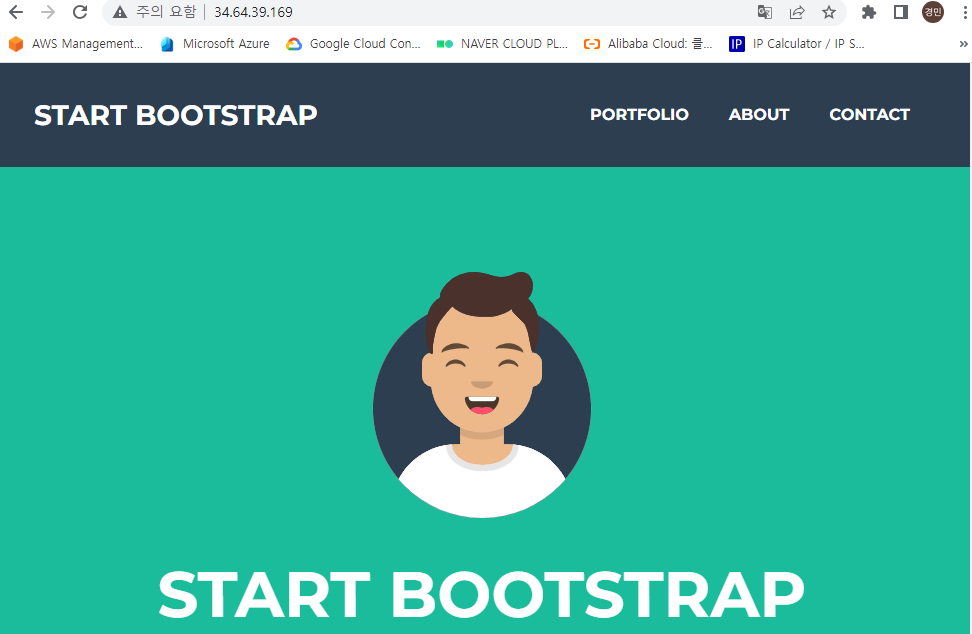

## Check the ingress service's IP and connect through the corresponding external IP

Path-based routing works as expected.

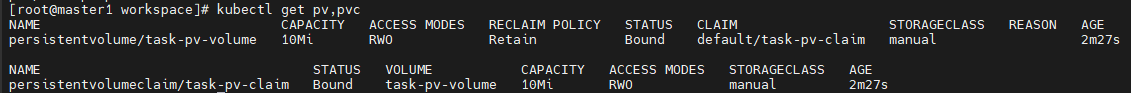

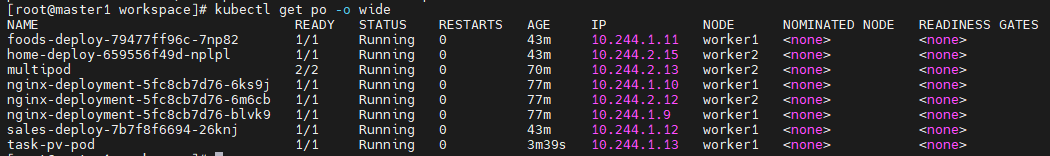

GCP - Persistent Volume

# vi pv-pvc-pod.yaml

//

apiVersion: v1

kind: PersistentVolume

metadata:

name: task-pv-volume

labels:

type: local

spec:

storageClassName: manual

capacity:

storage: 10Mi

accessModes:

- ReadWriteOnce

hostPath:

path: "/mnt/data"

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: task-pv-claim

spec:

storageClassName: manual

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Mi

selector:

matchLabels:

type: local

---

apiVersion: v1

kind: Pod

metadata:

name: task-pv-pod

labels:

app: task-pv-pod

spec:

volumes:

- name: task-pv-storage

persistentVolumeClaim:

claimName: task-pv-claim

containers:

- name: task-pv-container

image: nginx

ports:

- containerPort: 80

name: "http-server"

volumeMounts:

- mountPath: "/usr/share/nginx/html"

name: task-pv-storage

//

# kubectl apply -f pv-pvc-pod.yaml

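## Put the web files into the mount folder (hostPath /mnt/data) on the node where the pod was placed

## Change the load balancer's selector to the pod 'task-pv-pod'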

# kubectl edit svc loadbalancer-service-deployment

//

selector:

app: task-pv-pod

//

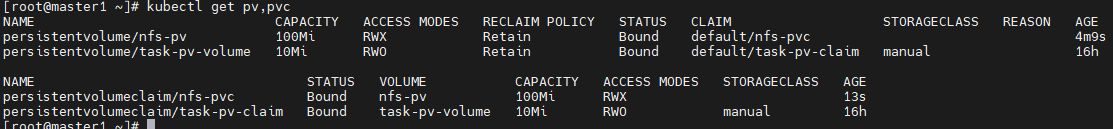

GCP - NFS

## Install on all nodes

#yum install -y nfs-utils.x86_64

## On the master node

# mkdir /nfs_shared

# chmod 777 /nfs_shared/

# echo '/nfs_shared 10.178.0.0/20(rw,sync,no_root_squash)' >> /etc/exports

# systemctl enable --now nfs
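The export line grants access to clients in 10.178.0.0/20, i.e. 10.178.0.0 through 10.178.15.255, which covers all three nodes' internal IPs. `no_root_squash` lets root on those clients act as root on the share, which is convenient for this lab but broad. If /etc/exports is edited while the NFS server is already running, `exportfs -ra` re-reads it. A sketch of the /20 membership check in bash (function names hypothetical):

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer (bash arithmetic is 64-bit).
ip2int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds if IP is inside NETWORK/PREFIX, e.g. in_cidr 10.178.0.3 10.178.0.0/20
in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Keep only the top $bits bits of the address: /20 -> 0xFFFFF000
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip2int "$ip") & mask )) -eq $(( $(ip2int "$net") & mask )) ]
}
```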

# vi nfs-pv.yaml

//

apiVersion: v1

kind: PersistentVolume

metadata:

name: nfs-pv

spec:

capacity:

storage: 100Mi

accessModes:

- ReadWriteMany # matching the PVC's access mode lets them bind without a selector

persistentVolumeReclaimPolicy: Retain

nfs:

server: 10.178.0.2 # master node's internal IP

path: /nfs_shared

//

# kubectl apply -f nfs-pv.yaml

# kubectl get pv

# vi nfs-pvc.yaml

//

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: nfs-pvc

spec:

accessModes:

- ReadWriteMany # matching the PV's access mode lets them bind without a selector

resources:

requests:

storage: 10Mi

//

# kubectl apply -f nfs-pvc.yaml

# kubectl get pv,pvc

# vi nfs-pvc-deploy.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: nfs-pvc-deploy

spec:

replicas: 4

selector:

matchLabels:

app: nfs-pvc-deploy

template:

metadata:

labels:

app: nfs-pvc-deploy

spec:

containers:

- name: nginx

image: nginx

volumeMounts:

- name: nfs-vol

mountPath: /usr/share/nginx/html

volumes:

- name: nfs-vol

persistentVolumeClaim:

claimName: nfs-pvc

//

# kubectl apply -f nfs-pvc-deploy.yaml

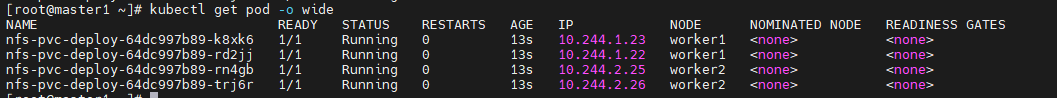

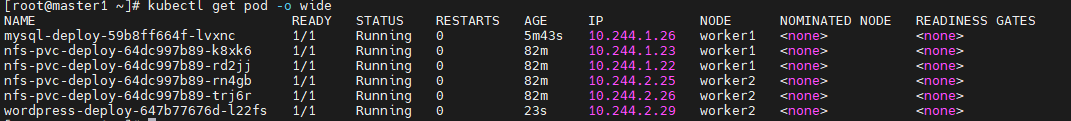

# kubectl get pod -o wide

# kubectl exec -it [name of a created pod] -- /bin/bash

# kubectl expose deployment nfs-pvc-deploy --type=LoadBalancer --name=nfs-pvc-deploy-svc1 --port=80

## Upload the website files to the mounted folder '/nfs_shared/'

GCP - configMap

#vi configmap-dev.yaml

//

apiVersion: v1

kind: ConfigMap

metadata:

name: config-dev

namespace: default

data:

DB_URL: localhost

DB_USER: myuser

DB_PASS: mypass

DEBUG_INFO: debug

//

# kubectl apply -f configmap-dev.yaml

# kubectl describe configmaps config-dev

# vi configmap-wordpress.yaml

//

apiVersion: v1

kind: ConfigMap

metadata:

name: config-wordpress

namespace: default

data:

MYSQL_ROOT_HOST: '%'

MYSQL_DATABASE: wordpress

//

# kubectl apply -f configmap-wordpress.yaml

# kubectl describe configmaps config-wordpress

# describe does not show a Secret's values (only their sizes)

vi secret.yaml

//

apiVersion: v1

kind: Secret

metadata:

name: my-secret

stringData:

MYSQL_ROOT_PASSWORD: mode1752

MYSQL_USER: wpuser

MYSQL_PASSWORD: wppass

//
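Values under `stringData` are stored base64-encoded in the Secret's `data` field. That is encoding, not encryption: `describe` hides them, but anyone allowed to read the Secret can decode them, e.g. `kubectl get secret my-secret -o jsonpath='{.data.MYSQL_PASSWORD}' | base64 -d`. A sketch of the same encoding with coreutils `base64` (helper names hypothetical):

```shell
#!/usr/bin/env bash
# Encode a value the way it appears under .data in a Secret (no trailing newline).
encode_secret_value() { printf '%s' "$1" | base64; }
# Decode a .data value back to plain text.
decode_secret_value() { printf '%s' "$1" | base64 -d; }

# Example: encode_secret_value wppass   # prints d3BwYXNz
```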

# kubectl apply -f secret.yaml

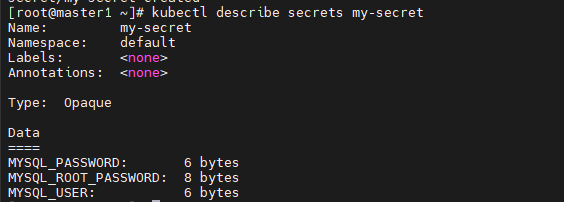

# kubectl describe secrets my-secret

# vi mysql-pod-svc.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: mysql-pod

labels:

app: mysql-pod

spec:

containers:

- name: mysql-container

image: mysql:5.7

envFrom:

- configMapRef:

name: config-wordpress

- secretRef: # sensitive values come from the Secret

name: my-secret

ports:

- containerPort: 3306

---

apiVersion: v1

kind: Service

metadata:

name: mysql-svc

spec:

type: ClusterIP

selector:

app: mysql-pod

ports:

- protocol: TCP

port: 3306

targetPort: 3306

//

# kubectl apply -f mysql-pod-svc.yaml

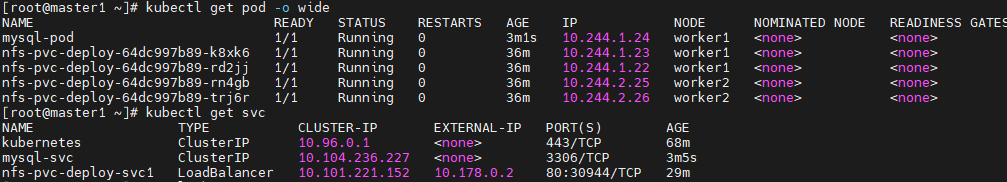

# kubectl get pod -o wide

# kubectl get svc

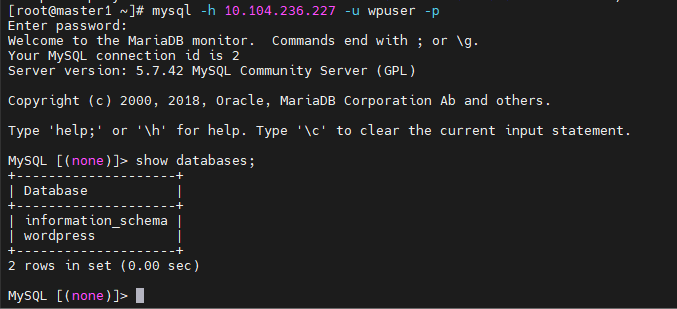

# mysql -h [ClusterIP of mysql-svc] -u wpuser -p

# vi wordpress-pod-svc.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: wordpress-pod

labels:

app: wordpress-pod

spec:

containers:

- name: wordpress-container

image: wordpress

env:

- name: WORDPRESS_DB_HOST

value: mysql-svc:3306

- name: WORDPRESS_DB_USER

valueFrom:

secretKeyRef: # this time read from the Secret instead of the ConfigMap

name: my-secret

key: MYSQL_USER

- name: WORDPRESS_DB_PASSWORD

valueFrom:

secretKeyRef:

name: my-secret

key: MYSQL_PASSWORD

- name: WORDPRESS_DB_NAME

valueFrom:

configMapKeyRef:

name: config-wordpress

key: MYSQL_DATABASE

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: wordpress-svc

spec:

type: LoadBalancer

# externalIPs:

# - 192.168.56.103

selector:

app: wordpress-pod

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f wordpress-pod-svc.yaml

# kubectl get pod -o wide

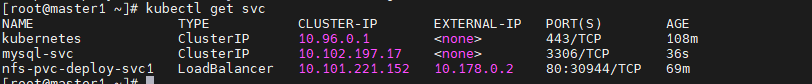

# kubectl get svc

## Delete the Pods so they can be recreated as Deployments

# kubectl delete -f mysql-pod-svc.yaml

# kubectl delete -f wordpress-pod-svc.yaml

# vi mysql-deploy-svc.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: mysql-deploy

labels:

app: mysql-deploy

spec:

replicas: 1

selector:

matchLabels:

app: mysql-deploy

template:

metadata:

labels:

app: mysql-deploy

spec:

containers:

- name: mysql-container

image: mysql:5.7

envFrom:

- configMapRef:

name: config-wordpress

- secretRef:

name: my-secret

ports:

- containerPort: 3306

---

apiVersion: v1

kind: Service

metadata:

name: mysql-svc

spec:

type: ClusterIP

selector:

app: mysql-deploy

ports:

- protocol: TCP

port: 3306

targetPort: 3306

//

# kubectl apply -f mysql-deploy-svc.yaml

# kubectl get pod -o wide

# kubectl get svc

# vi wordpress-deploy-svc.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: wordpress-deploy

labels:

app: wordpress-deploy

spec:

replicas: 1

selector:

matchLabels:

app: wordpress-deploy

template:

metadata:

labels:

app: wordpress-deploy

spec:

containers:

- name: wordpress-container

image: wordpress

env:

- name: WORDPRESS_DB_HOST

value: mysql-svc:3306

- name: WORDPRESS_DB_USER

valueFrom:

secretKeyRef:

name: my-secret

key: MYSQL_USER

- name: WORDPRESS_DB_PASSWORD

valueFrom:

secretKeyRef:

name: my-secret

key: MYSQL_PASSWORD

- name: WORDPRESS_DB_NAME

valueFrom:

configMapKeyRef:

name: config-wordpress

key: MYSQL_DATABASE

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: wordpress-svc

spec:

type: LoadBalancer

# externalIPs:

# - 192.168.1.102

selector:

app: wordpress-deploy

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f wordpress-deploy-svc.yaml

# kubectl get pod -o wide

# kubectl get svc

# mysql -h [ClusterIP of mysql-svc] -u wpuser -p

GCP - ResourceQuota

## Reset

# kubectl delete all --all

# kubectl create namespace kyounggu

# vi sample-resourcequota.yaml

//

apiVersion: v1

kind: ResourceQuota

metadata:

name: sample-resourcequota

namespace: kyounggu

spec:

hard:

count/pods: 5

//

# kubectl apply -f sample-resourcequota.yaml

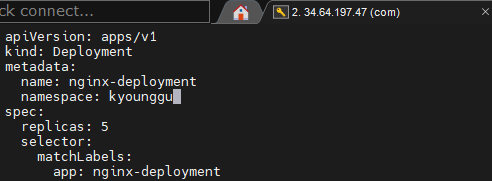

# vi deployment.yaml

//

Add namespace: kyounggu under metadata

//

Change replicas to 5

//

# kubectl apply -f deployment.yaml

# kubectl -n kyounggu scale deployment nginx-deployment --replicas=6

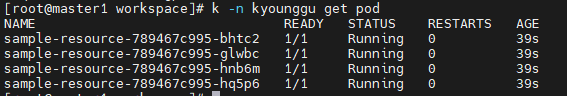

# kubectl -n kyounggu get pod

# kubectl delete -f deployment.yaml

# kubectl -n kyounggu delete resourcequotas sample-resourcequota

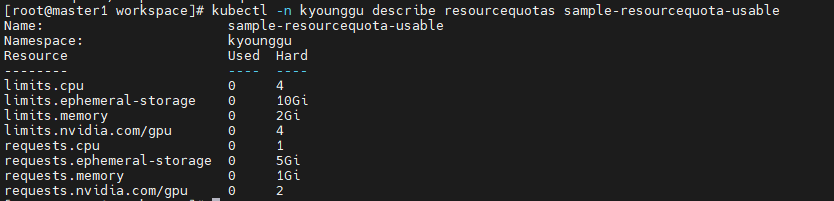

# vi sample-resourcequota-usable.yaml

//

apiVersion: v1

kind: ResourceQuota

metadata:

name: sample-resourcequota-usable

namespace: kyounggu

spec:

hard:

requests.cpu: "1" # total CPU requests of all pods in the namespace must not exceed 1 CPU

requests.memory: 1Gi # total memory requests of all pods in the namespace must not exceed 1GiB

requests.ephemeral-storage: 5Gi

requests.nvidia.com/gpu: 2

limits.cpu: 4 # total CPU limits of all pods in the namespace must not exceed 4 CPUs

limits.memory: 2Gi # total memory limits of all pods in the namespace must not exceed 2GiB

limits.ephemeral-storage: 10Gi

limits.nvidia.com/gpu: 4

//

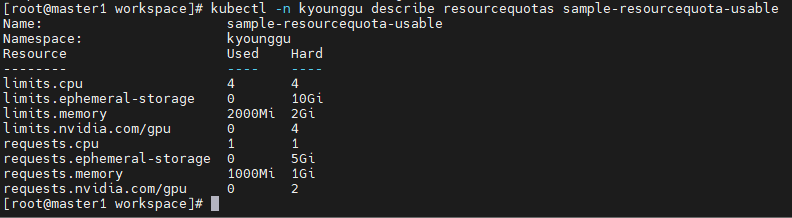

# kubectl apply -f sample-resourcequota-usable.yaml

# kubectl -n kyounggu describe resourcequotas sample-resourcequota-usable

# kubectl run new-nginx1 --image=nginx -n kyounggu

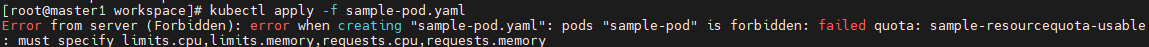

# vi sample-pod.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: sample-pod

namespace: kyounggu

spec:

containers:

- name: nginx-container

image: nginx

//

# kubectl apply -f sample-pod.yaml

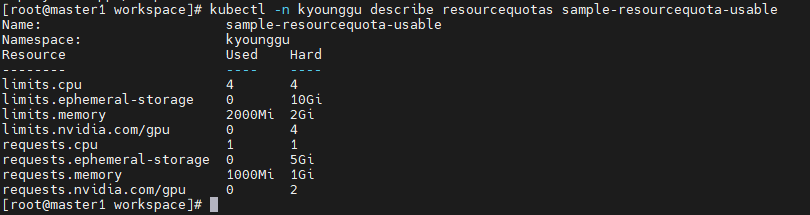

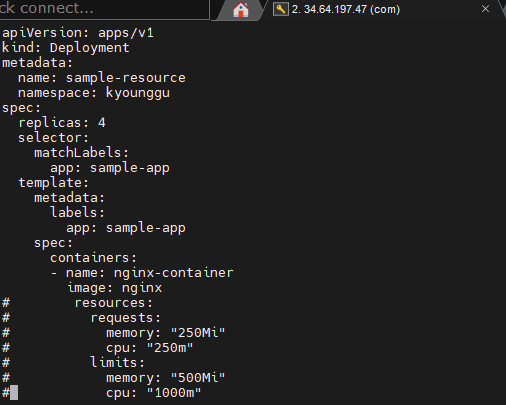

# vi sample-resource.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: sample-resource

namespace: kyounggu

spec:

replicas: 4

selector:

matchLabels:

app: sample-app

template:

metadata:

labels:

app: sample-app

spec:

containers:

- name: nginx-container

image: nginx

resources:

requests:

memory: "250Mi"

cpu: "250m"

limits:

memory: "500Mi"

cpu: "1000m"

//

# kubectl apply -f sample-resource.yaml

# kubectl -n kyounggu describe resourcequotas sample-resourcequota-usable
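Each `sample-resource` replica requests 250m CPU / 250Mi and is limited to 1000m / 500Mi. Four replicas therefore use 1000m of the 1-CPU `requests.cpu` quota and 4000m of the 4-CPU `limits.cpu` quota, so a fifth would exceed both CPU quotas. Once a quota covers CPU or memory, pods that do not declare those requests/limits are rejected, which is likely why plain `nginx` pods fail in this namespace. A sketch of the arithmetic with CPU in millicores and memory in Mi (the function is hypothetical):

```shell
#!/usr/bin/env bash
# Largest replica count whose summed per-pod amounts stay within every quota.
# Each argument is "per_pod:quota" in one unit (CPU in millicores, memory in Mi).
max_replicas() {
  local best=-1 pair per quota n
  for pair in "$@"; do
    per="${pair%%:*}"; quota="${pair##*:}"
    n=$(( quota / per ))
    if [ "$best" -lt 0 ] || [ "$n" -lt "$best" ]; then best=$n; fi
  done
  echo "$best"
}

# requests.cpu, requests.memory (1Gi = 1024Mi), limits.cpu, limits.memory (2Gi = 2048Mi):
# max_replicas 250:1000 250:1024 1000:4000 500:2048   # prints 4
```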

# kubectl delete -f sample-resource.yaml

GCP - LimitRange

# vi sample-limitrange-container.yaml

//

apiVersion: v1

kind: LimitRange

metadata:

name: sample-limitrange-container

namespace: kyounggu

spec:

limits:

- type: Container

default:

memory: 500Mi

cpu: 1000m

defaultRequest:

memory: 250Mi

cpu: 250m

max:

memory: 500Mi

cpu: 1000m

min:

memory: 250Mi

cpu: 250m

maxLimitRequestRatio:

memory: 2

cpu: 4

//
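For a Container LimitRange, a container's request must be at least `min`, its limit at most `max`, and limit divided by request at most `maxLimitRequestRatio`; `default` and `defaultRequest` fill in whatever the pod omits. The defaults here sit exactly on the edges: 1000m / 250m = 4 for CPU and 500Mi / 250Mi = 2 for memory. A simplified sketch of those checks for one resource (hypothetical function; values in millicores or Mi):

```shell
#!/usr/bin/env bash
# Simplified Container LimitRange check for a single resource.
# Args: REQUEST LIMIT MIN MAX RATIO
# Passes when min <= request <= limit <= max and limit <= ratio * request.
limitrange_allows() {
  local request="$1" limit="$2" min="$3" max="$4" ratio="$5"
  [ "$request" -ge "$min" ] && [ "$limit" -le "$max" ] &&
    [ "$request" -le "$limit" ] && [ "$limit" -le $(( ratio * request )) ]
}

# Defaults from sample-limitrange-container:
# limitrange_allows 250 1000 250 1000 4   # CPU
# limitrange_allows 250 500 250 500 2     # memory
```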

# kubectl apply -f sample-limitrange-container.yaml

# kubectl -n kyounggu describe limitranges sample-limitrange-container

# vi sample-resource.yaml

//

Comment out the resources section

//

# kubectl apply -f sample-resource.yaml

# kubectl -n kyounggu get pod

# kubectl -n kyounggu describe resourcequotas sample-resourcequota-usable

# kubectl delete -f sample-resource.yaml

# kubectl delete -f sample-resourcequota-usable.yaml

# kubectl delete -f sample-limitrange-container.yaml

GCP - Scheduling

GCP - Pod scheduling (automatic placement)

# vi pod-schedule.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: pod-schedule-metadata

labels:

app: pod-schedule-labels

spec:

containers:

- name: pod-schedule-containers

image: nginx

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: pod-schedule-service

spec:

type: NodePort

selector:

app: pod-schedule-labels

ports:

- protocol: TCP

port: 80

targetPort: 80

//

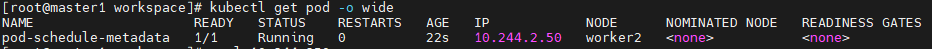

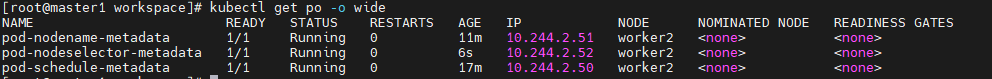

# kubectl get pod -o wide

# curl [pod IP]

GCP - Pod nodeName (manual placement)

# vi pod-nodename.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: pod-nodename-metadata

labels:

app: pod-nodename-labels

spec:

containers:

- name: pod-nodename-containers

image: nginx

ports:

- containerPort: 80

nodeName: worker2 # pin to the node that already runs a pod

---

apiVersion: v1

kind: Service

metadata:

name: pod-nodename-service

spec:

type: NodePort

selector:

app: pod-nodename-labels

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f pod-nodename.yaml

# kubectl get pod -o wide

GCP - Node selector (manual placement)

# kubectl get pod --show-labels

# kubectl get pod --selector=app=pod-nodename-labels

# Add a label to worker2

kubectl label nodes worker2 tier=dev

# kubectl get nodes --show-labels

# vi pod-nodeselector.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: pod-nodeselector-metadata

labels:

app: pod-nodeselector-labels

spec:

containers:

- name: pod-nodeselector-containers

image: nginx

ports:

- containerPort: 80

nodeSelector:

tier: dev

---

apiVersion: v1

kind: Service

metadata:

name: pod-nodeselector-service

spec:

type: NodePort

selector:

app: pod-nodeselector-labels

ports:

- protocol: TCP

port: 80

targetPort: 80

//

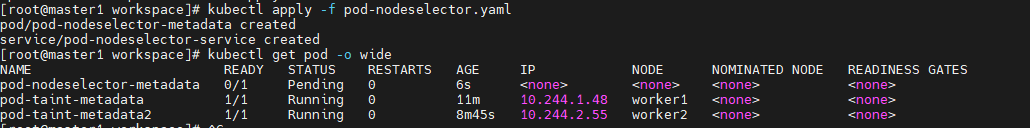

# kubectl apply -f pod-nodeselector.yaml

# kubectl get po -o wide

# kubectl label nodes worker2 tier-

# kubectl get nodes --show-labels

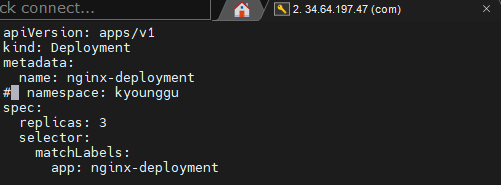

vi deployment.yaml

//

Comment out the namespace

Set replicas to 3

//

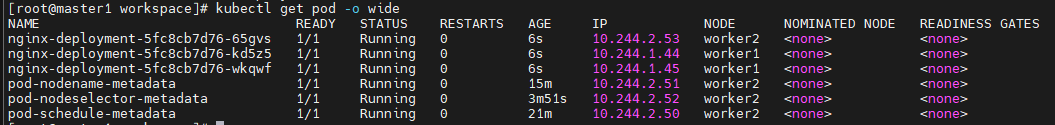

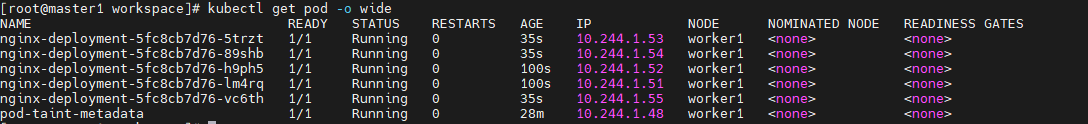

# kubectl apply -f deployment.yaml

# kubectl get pod -o wide

GCP - Taints and tolerations

# kubectl delete all --all

# kubectl taint node worker1 tier=dev:NoSchedule

# kubectl taint node worker2 tier=prod:NoSchedule

# kubectl describe nodes worker1 | grep -i Taints

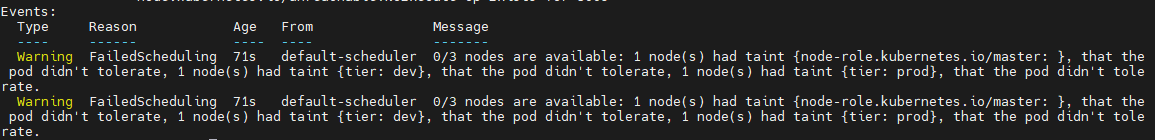

# Every node has a taint, so the pod cannot be scheduled (Pending)

kubectl apply -f sample-pod.yaml

# kubectl get pod -o wide -n kyounggu

# kubectl -n kyounggu describe pod sample-pod

# vi pod-taint.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: pod-taint-metadata

labels:

app: pod-taint-labels

spec:

containers:

- name: pod-taint-containers

image: nginx

ports:

- containerPort: 80

tolerations:

- key: "tier"

operator: "Equal"

value: "dev"

effect: "NoSchedule"

---

apiVersion: v1

kind: Service

metadata:

name: pod-taint-service

spec:

type: NodePort

selector:

app: pod-taint-labels

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f pod-taint.yaml

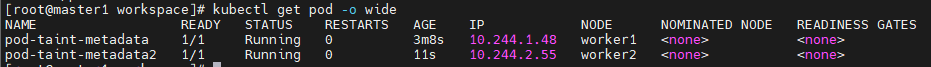

# kubectl get pod -o wide
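A taint added with `kubectl taint node NAME key=value:effect` keeps pods off the node unless they carry a matching toleration. With `operator: Equal`, key and value must both match, and an empty `effect` in a toleration matches any effect. So `pod-taint` (tier=dev) should land on worker1, while the master is excluded by the `node-role.kubernetes.io/master:NoSchedule` taint that kubeadm 1.19 adds. A simplified sketch of the Equal-operator match (hypothetical function; the real scheduler also handles `Exists`, multiple taints, and `NoExecute` eviction):

```shell
#!/usr/bin/env bash
# Simplified sketch: does a toleration (operator Equal) tolerate a taint given
# in kubectl's "key=value:effect" form? Args: TAINT KEY VALUE [EFFECT]
tolerates() {
  local taint="$1" key="$2" value="$3" effect="${4:-}"
  local t_key="${taint%%=*}" rest="${taint#*=}"
  local t_value="${rest%%:*}" t_effect="${rest##*:}"
  [ "$t_key" = "$key" ] && [ "$t_value" = "$value" ] &&
    { [ -z "$effect" ] || [ "$t_effect" = "$effect" ]; }   # empty effect = any effect
}

# Example: tolerates tier=dev:NoSchedule tier dev NoSchedule && echo "worker1 OK"
```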

# cp pod-taint.yaml pod-taint2.yaml

# vi pod-taint2.yaml

//

apiVersion: v1

kind: Pod

metadata:

name: pod-taint-metadata2

labels:

app: pod-taint-labels2

spec:

containers:

- name: pod-taint-containers2

image: nginx

ports:

- containerPort: 80

tolerations:

- key: "tier"

operator: "Equal"

value: "prod"

effect: "NoSchedule"

---

apiVersion: v1

kind: Service

metadata:

name: pod-taint-service2

spec:

type: NodePort

selector:

app: pod-taint-labels2

ports:

- protocol: TCP

port: 80

targetPort: 80

//

# kubectl apply -f pod-taint2.yaml

## Remove the taints

# kubectl taint node worker1 tier=dev:NoSchedule-

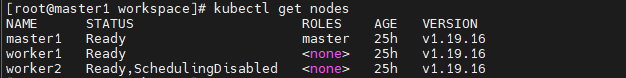

# kubectl taint node worker2 tier=prod:NoSchedule-

GCP - cordon

# Cordon worker2 so no new pods are scheduled on it

kubectl cordon worker2

# Uncordon worker2

kubectl uncordon worker2

GCP - drain

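# Drain worker2: cordon it and evict its pods (on kubectl 1.20+, --delete-local-data is renamed --delete-emptydir-data)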

kubectl drain worker2 --ignore-daemonsets --force --delete-local-data

# Use uncordon to clear the unschedulable state left by drain

kubectl uncordon worker2

GCP - Monitoring

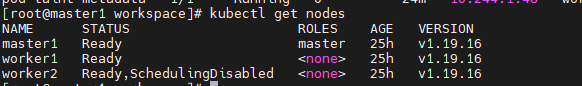

GCP - Installing Prometheus

# Install metrics-server

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml

# Change the TLS setting (make metrics-server skip kubelet certificate verification)

kubectl edit deployments.apps -n kube-system metrics-server

// add to args

--kubelet-insecure-tls

//

# kubectl top node

# Create the monitoring namespace

kubectl create ns monitoring

# Clone from git

cd

git clone https://github.com/hali-linux/my-prometheus-grafana.git

cd my-prometheus-grafana

## Install Prometheus

kubectl apply -f prometheus-cluster-role.yaml

kubectl apply -f prometheus-config-map.yaml

kubectl apply -f prometheus-deployment.yaml

kubectl apply -f prometheus-node-exporter.yaml # acts as an agent

kubectl apply -f prometheus-svc.yaml

kubectl get pod -n monitoring -o wide

kubectl apply -f kube-state-cluster-role.yaml # kube-state: acts as an agent

kubectl apply -f kube-state-deployment.yaml

kubectl apply -f kube-state-svcaccount.yaml

kubectl apply -f kube-state-svc.yaml

kubectl get pod -n kube-system

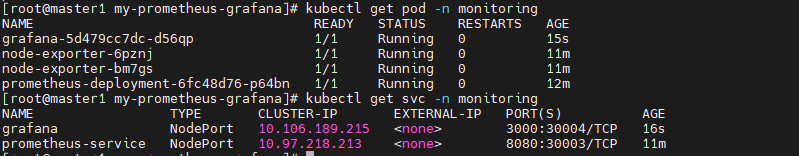

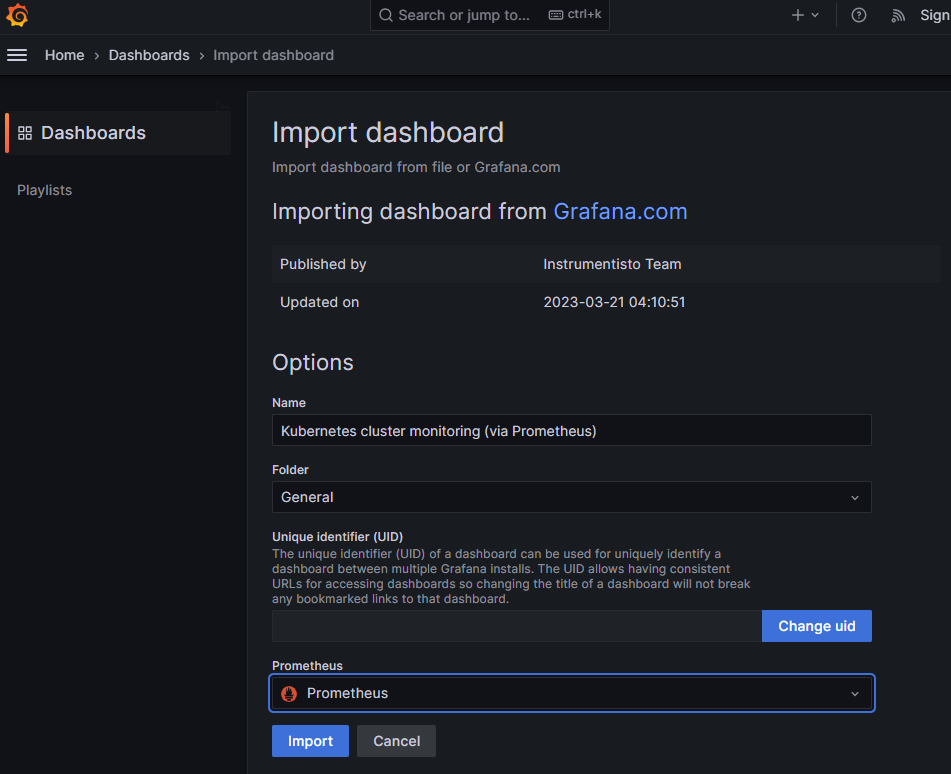

Installing Grafana

# Install Grafana

kubectl apply -f grafana.yaml

# kubectl get pod -n monitoring

# kubectl get svc -n monitoring

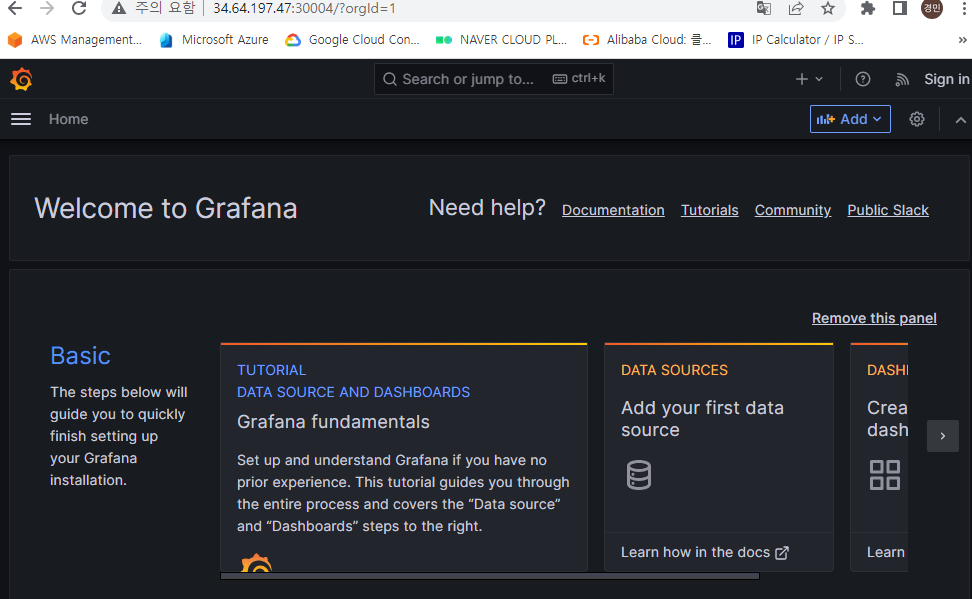

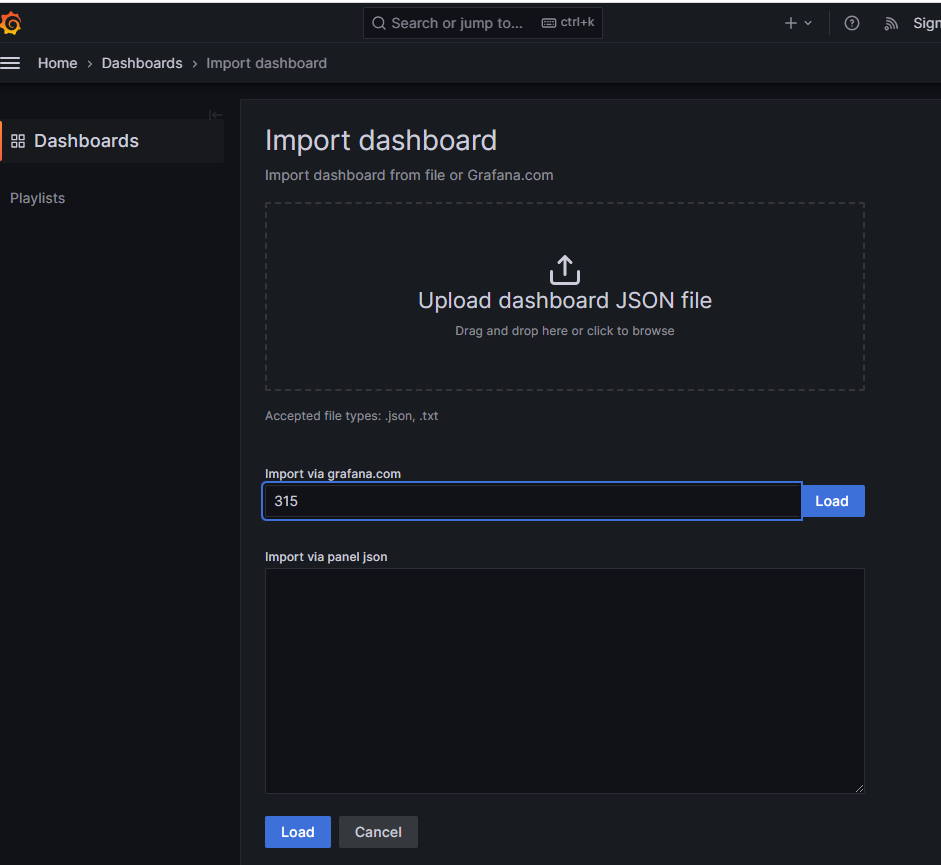

Using Grafana

GCP - Autoscaling

GCP - Autoscaling (HPA)

# php-apache template

vi php-apache.yaml

//

apiVersion: apps/v1

kind: Deployment

metadata:

name: php-apache

spec:

selector:

matchLabels:

run: php-apache

replicas: 2 # desired capacity (initial count)

template:

metadata:

labels:

run: php-apache

spec:

containers:

- name: php-apache

image: k8s.gcr.io/hpa-example

ports:

- containerPort: 80

resources:

limits:

cpu: 500m

requests:

cpu: 200m

---

apiVersion: v1

kind: Service

metadata:

name: php-apache

labels:

run: php-apache

spec:

ports:

- port: 80

selector:

run: php-apache

//

# kubectl apply -f php-apache.yaml

# kubectl get pod -o wide

# Create the autoscaling (HPA) template

vi hpa.yaml

//

apiVersion: autoscaling/v1

kind: HorizontalPodAutoscaler

metadata:

name: php-apache

spec:

maxReplicas: 4

minReplicas: 2

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: php-apache

targetCPUUtilizationPercentage: 50

status:

currentCPUUtilizationPercentage: 0

currentReplicas: 2

desiredReplicas: 2

//
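The HPA compares the pods' average CPU usage, as a percentage of their `requests.cpu` (200m here), with `targetCPUUtilizationPercentage`. It computes desired = ceil(current replicas × current / target), skips changes within a default 10% tolerance, and clamps the result between `minReplicas` and `maxReplicas`. The `status` section in hpa.yaml is filled in by the controller and could be left out. A sketch of that formula in bash integer arithmetic (hypothetical function; percentages as whole numbers):

```shell
#!/usr/bin/env bash
# desired = ceil(current * utilization / target), unchanged within 10% of target,
# then clamped to [min, max]. Args: CURRENT UTIL% TARGET% MIN MAX
hpa_desired() {
  local current="$1" util="$2" target="$3" min="$4" max="$5" desired diff
  diff=$(( util > target ? util - target : target - util ))
  if (( diff * 10 <= target )); then
    desired=$current                                       # within tolerance: no change
  else
    desired=$(( (current * util + target - 1) / target ))  # integer ceiling division
  fi
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

# Example: hpa_desired 2 90 50 2 4   # 2 pods at 90% vs target 50% -> 4
```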

# kubectl apply -f hpa.yaml

# Create a temporary pod to generate load (--rm: delete it on exit, --restart=Never: run once)

kubectl run -i --tty load-generator --rm --image=busybox:1.28 --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

## Open a second master1 console session and monitor from it

watch kubectl get all -o wide
